Hey, Leo here, the owner of Monster Tycoon! In this blog post I'll be discussing how I designed and then developed Monster Tycoon's server infrastructure, so it covers all of the backend technical shenanigans and the systems behind them.

If you're not really a techy person, that's OK! To sum this entire blog post up in simpler terms: Monster Tycoon needs to handle lots of players at once, and to do that I've created a system that lets me and our future team spin up new servers on the fly for players to join and play on. The catch is that players won't even notice! The backend makes sure each player gets connected to the next available server, so they can carry on playing as if they had never been connected to a different server.

Let's dive into how I approached creating the infrastructure, going over the system design behind it and all of the technical aspects. If you haven't read the blog post on what Monster Tycoon is, I'd encourage you to do so! You can also read up on how I've gone about creating Monster Tycoon.

Designing the Infrastructure Systems

Monster Tycoon's gameplay takes place solely on the player's private island, which is simply a Minecraft world (one world per island). Since each world holds a single player's island, the idea is that one Paper server can host multiple worlds, and we can then scale these servers up or down based on the traffic Monster Tycoon is receiving. Although you would typically scale servers automatically based on the number of players online, I opted for manual scaling via a simple command. This works well for what Monster Tycoon is, gives us finer control over how many servers are online, and is cheaper.
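To make the idea of several islands sharing one Paper server a little more concrete, here is a minimal Java sketch of how a player's island could be assigned to the least-loaded server that still has room. The `PaperServer` and `IslandAllocator` classes and the per-server island capacity are hypothetical illustrations, not Monster Tycoon's actual code.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical model of one Paper server hosting several island worlds.
final class PaperServer {
    private final String name;
    private final int maxIslands; // assumed per-server capacity, not a real Monster Tycoon figure
    private final List<String> loadedIslands = new ArrayList<>();

    PaperServer(String name, int maxIslands) {
        this.name = name;
        this.maxIslands = maxIslands;
    }

    String name() { return name; }

    int load() { return loadedIslands.size(); }

    boolean hasCapacity() { return loadedIslands.size() < maxIslands; }

    void loadIsland(String playerId) { loadedIslands.add(playerId); }
}

// Hypothetical allocator: sends each island to the least-loaded server with free capacity.
final class IslandAllocator {
    private final List<PaperServer> servers = new ArrayList<>();

    // Called when a new server is brought online by a manual scale-up.
    void addServer(PaperServer server) { servers.add(server); }

    // Returns the chosen server, or empty if every server is full.
    Optional<PaperServer> place(String playerId) {
        Optional<PaperServer> target = servers.stream()
                .filter(PaperServer::hasCapacity)
                .min(Comparator.comparingInt(PaperServer::load));
        target.ifPresent(server -> server.loadIsland(playerId));
        return target;
    }
}
```

With manual scaling, an empty result from `place` is the signal that someone needs to run the scale-up command.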

The next thing was ensuring that a player's data persists across multiple servers. In a Minecraft network with dynamically scaling servers that need persistent data, you usually have to account for not only player and server data but also Minecraft world data. That's where you might use a solution like Hypixel's Slime Region Format (which has since been publicly remade by other developers). Luckily for us, this part was relatively simple.

As previously mentioned, a player's island is situated in a Minecraft world, but players can't build in this world (so no breaking or placing blocks); they can only interact with the various gameplay mechanics situated on the island itself. This means that only player data needs to persist across the different servers, not world data. To achieve this, everything is stored in MySQL (using HikariCP for high-performance connection pooling), and we use Redis for data that needs to be accessed quickly and efficiently, as well as for communication channels, such as messaging between the Paper servers and the Velocity proxy.
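One common way to combine a fast store with a durable one is the cache-aside pattern, sketched below under a few assumptions: the `PlayerDatabase` and `PlayerCache` interfaces are hypothetical stand-ins for MySQL (via HikariCP) and Redis, a single `coins` value stands in for real player data, and in-memory maps replace both stores so the example runs on its own. It illustrates the general pattern, not necessarily how Monster Tycoon reads and writes its data.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical stand-in for the MySQL source of truth.
interface PlayerDatabase {
    Optional<Long> loadCoins(String playerId);
    void saveCoins(String playerId, long coins);
}

// Hypothetical stand-in for Redis: fast key-value access in front of the database.
interface PlayerCache {
    Optional<Long> get(String playerId);
    void put(String playerId, long coins);
}

final class PlayerDataService {
    private final PlayerDatabase db;
    private final PlayerCache cache;

    PlayerDataService(PlayerDatabase db, PlayerCache cache) {
        this.db = db;
        this.cache = cache;
    }

    // Cache-aside read: try the cache first, then fall back to the database and fill the cache.
    long coins(String playerId) {
        Optional<Long> cached = cache.get(playerId);
        if (cached.isPresent()) {
            return cached.get();
        }
        long coins = db.loadCoins(playerId).orElse(0L);
        cache.put(playerId, coins);
        return coins;
    }

    // Write to the database first so any server that misses the cache still sees the new value.
    void setCoins(String playerId, long coins) {
        db.saveCoins(playerId, coins);
        cache.put(playerId, coins);
    }
}

// In-memory implementations so the sketch runs without MySQL or Redis.
final class InMemoryDatabase implements PlayerDatabase {
    final Map<String, Long> rows = new HashMap<>();
    int reads = 0; // counts database hits to show the cache working

    public Optional<Long> loadCoins(String playerId) {
        reads++;
        return Optional.ofNullable(rows.get(playerId));
    }

    public void saveCoins(String playerId, long coins) { rows.put(playerId, coins); }
}

final class InMemoryCache implements PlayerCache {
    private final Map<String, Long> entries = new HashMap<>();

    public Optional<Long> get(String playerId) { return Optional.ofNullable(entries.get(playerId)); }

    public void put(String playerId, long coins) { entries.put(playerId, coins); }
}
```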

The design behind this dynamically scaling Minecraft server system let me achieve what I set out to: handling large numbers of players, and persisting data across servers so that, from the player's point of view, nothing seems to have changed internally. On top of that, it's a common pattern for almost any service, both inside and outside of Minecraft, so there are plenty of resources to learn from when figuring out how to fit it into Monster Tycoon's overall design.

Choosing How to Scale Servers

When I first started thinking about and designing this system, I had heard of Docker but knew barely anything about it, and figuring it out was low on my list of priorities. I first had to understand how the Velocity API works and how I could integrate scalable Minecraft servers with Velocity. So I began experimenting with the Velocity API: registering X servers with Velocity on startup, creating Velocity commands to manage scaling, and using various other parts of the API.
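Registering X servers on startup mostly comes down to deciding a name and address for each backend. The sketch below only plans those entries using the JDK; in a real Velocity plugin, each entry would be passed to `ProxyServer#registerServer(new ServerInfo(name, address))`. The `island-` naming scheme, the host and the consecutive-port layout are assumptions made for illustration.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;

// Mirrors the (name, address) pair that Velocity's ServerInfo holds.
record ServerEntry(String name, InetSocketAddress address) {}

final class StartupServers {
    // Plans `count` backend servers on consecutive ports starting at basePort.
    // Hypothetical scheme: island-1, island-2, ... on basePort, basePort + 1, ...
    static List<ServerEntry> plan(String host, int basePort, int count) {
        List<ServerEntry> entries = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            String name = "island-" + (i + 1);
            entries.add(new ServerEntry(name, new InetSocketAddress(host, basePort + i)));
        }
        return entries;
    }
}
```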

Once I felt comfortable with how the Velocity API works, I began figuring out how to actually create the servers that the Velocity plugin was now registering. Initially, I decided to start with Python instead of Docker, as I was more familiar with Python. In hindsight, it would've been better to start with Docker and learn from there, but I still learnt a lot from starting with Python, such as what I needed to do from a technological standpoint to actually get the designed system working.

First, I wrote a Python script that clones a 'template server' and then automatically starts it up, so it acts as the process that creates the physical server after the Velocity plugin registers that server with the proxy. I used Javalin (a web framework for Java and Kotlin) to let the Velocity plugin and the Python script communicate: once a server is registered with the proxy, a request is sent to the Python script via Javalin, telling it to create the physical server and start it up. This worked well locally, but as I got deeper into development I began to realise it wouldn't fit a production environment well. Given the resources I had available, I needed something with more of the built-in tooling that a server-scaling system requires.
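The original script was written in Python, but to keep this post's examples in one language, here is a rough Java equivalent of the 'clone a template server' step: it recursively copies a template directory into a new server directory using only `java.nio.file`. The class name and directory layout are hypothetical, and actually starting the copied server (for example with `ProcessBuilder`) is left out.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.stream.Stream;

final class TemplateCloner {
    // Recursively copies the template server directory into a brand-new server directory.
    static Path cloneTemplate(Path template, Path target) throws IOException {
        if (Files.exists(target)) {
            throw new IOException("Target already exists: " + target);
        }
        // Files.walk visits a directory before its contents, so parents are created first.
        try (Stream<Path> paths = Files.walk(template)) {
            paths.forEach(source -> {
                Path destination = target.resolve(template.relativize(source));
                try {
                    if (Files.isDirectory(source)) {
                        Files.createDirectories(destination);
                    } else {
                        Files.copy(source, destination, StandardCopyOption.COPY_ATTRIBUTES);
                    }
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        }
        return target;
    }
}
```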

This is where Docker comes into play, which deserves its own section below.

Learning & Using Docker

When the Python system was nearing the stage I wanted it to be at, I spoke to a friend of mine who does a lot of system administration work for me (malatdu). He mentioned Docker and was curious as to why I hadn't used it in the first place. It had been in the back of my mind since I began designing and developing this system, so I gave it more thought, talked it through with malatdu and realised that the best way forward for this system would be Docker Swarm.

Now, I had heard of Docker and roughly knew what it was about, but that didn't mean I knew what I had to do to get the system working, and so the learning process began. Malatdu had suggested Docker Swarm, an orchestration tool built into Docker that manages containers across a cluster of Docker hosts, so I had to learn how Docker works, what Docker Swarm is and how to integrate it into the Velocity plugin using the Docker Java API.

I began with a really bare-bones system built directly into the Velocity plugin, which used the Docker Java API to create Docker Swarm services for the Paper servers and register them with the proxy. Once I had that figured out, I faced my first issue with Docker: how do I let the containers connect to the MySQL and Redis servers, while also letting my proxy, which isn't containerised, connect to those same MySQL and Redis servers? This is where Docker Swarm's handy overlay networks came into play (a type of network that lets containers on multiple Docker hosts within a swarm communicate with each other). I created a service each for MySQL and Redis, then connected both of those services and the Paper servers' services to the same overlay network so that they could all reach each other. By then allowing MySQL and Redis to accept outside connections, I was able to connect the proxy on the host to the containerised MySQL and Redis servers. After adding further mechanics such as deleting services from the swarm, I had turned the initially bare-bones Docker system into a highly functional one.
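The Docker Java API calls themselves aren't reproduced here. Instead, the sketch below builds the equivalent `docker` CLI commands for the setup described above: an overlay network, plus MySQL and Redis services attached to it. It assumes the outside access for the host proxy is done by publishing each service's port; the network name, images and ports are illustrative, and environment variables such as database credentials are omitted. The argument lists could be run with `ProcessBuilder` on a swarm manager node.

```java
import java.util.List;

// Builds docker CLI argument lists for the swarm setup described above.
final class SwarmCommands {
    // Overlay networks span every node in the swarm (create them on a manager node).
    static List<String> createOverlayNetwork(String network) {
        return List.of("docker", "network", "create", "--driver", "overlay", network);
    }

    // Service attached to the shared overlay network that also publishes a port,
    // so clients outside the swarm (like the proxy on the host) can connect.
    static List<String> createPublishedService(String name, String image, String network,
                                               int publishedPort, int targetPort) {
        return List.of("docker", "service", "create",
                "--name", name,
                "--network", network,
                "--publish", publishedPort + ":" + targetPort,
                image);
    }
}
```

For example, `createPublishedService("redis", "redis:7", "monster-net", 6379, 6379)` yields `docker service create --name redis --network monster-net --publish 6379:6379 redis:7`.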

However, despite it being functional (and even ready for a production environment given a few further optimisations), I realised it didn't really work the way Docker Swarm was intended to be used, and that there were other ways I could develop this system to get the most out of it. In Docker Swarm, a service acts as a high-level 'manager' for an application within a swarm. Just like a regular container, you assign it an image, the targeted internal port and published external port, attach a network to it, and so on. When you create a service, a single container is created alongside it by default, but one service can have multiple containers. These are called service replicas, where each replica is one container within that service, and when you update the service (for example, its image), all containers under it are updated as well. In the Docker setup I had developed so far, each Paper server was its own service. However, due to the way Monster Tycoon's gameplay is designed, I could just as well run a single service for the Paper server and then replicate it so that there are multiple Paper servers, which allows for more fine-tuned control over the servers.
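To illustrate the service/replica relationship described above, here is a small in-memory Java model. It is not Docker's API: `ServiceModel` and its replica naming are hypothetical, but it shows the key idea that replicas share one service spec, so scaling changes how many copies run and updating the image applies to all of them.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model: one service spec, many identical replicas.
final class ServiceModel {
    record Replica(String name, String image) {}

    private final String name;
    private String image;
    private final List<Replica> replicas = new ArrayList<>();

    ServiceModel(String name, String image) {
        this.name = name;
        this.image = image;
        scale(1); // a new service starts with a single replica
    }

    // Adds or removes replicas until the count matches the target.
    void scale(int target) {
        if (target < 0) {
            throw new IllegalArgumentException("replicas must be >= 0");
        }
        while (replicas.size() < target) {
            replicas.add(new Replica(name + "." + (replicas.size() + 1), image));
        }
        while (replicas.size() > target) {
            replicas.remove(replicas.size() - 1);
        }
    }

    // Updating the service spec rolls the new image out to every replica.
    void updateImage(String newImage) {
        image = newImage;
        replicas.replaceAll(r -> new Replica(r.name(), newImage));
    }

    List<Replica> replicas() { return List.copyOf(replicas); }
}
```

On a real swarm, the equivalent of `scale(3)` for a service named `paper` is `docker service scale paper=3`.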

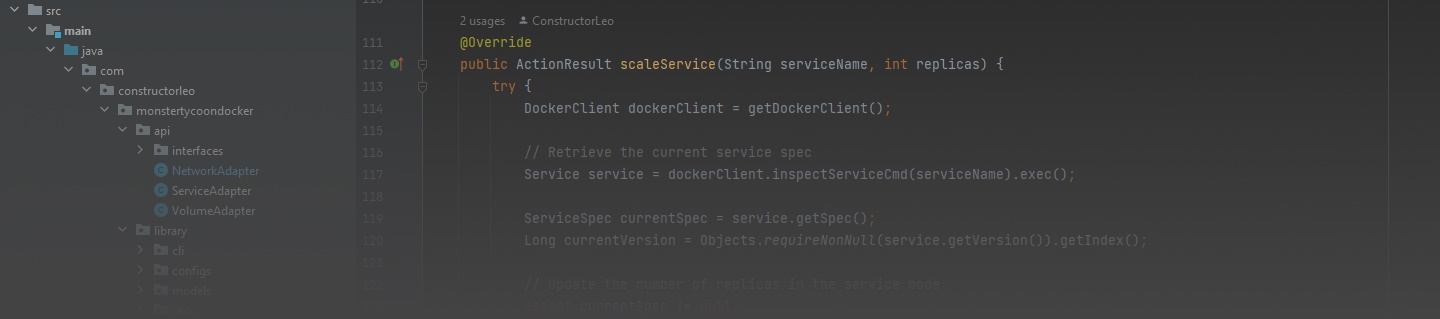

With that in mind, I first tried implementing the single service with multiple replicas for the Paper servers into what I had already developed. However, because the proxy server was not a service (or containerised at all), this would have been a lot more difficult, so I decided to rewrite the entire Docker system from scratch as a standalone Java application that handles all orchestration within the swarm. The rewrite gave me better control over services, networks and even volumes (for MySQL and Redis), as well as better error and exception handling. To make use of this application, I created a simple API and published it to GitHub Packages so that the Velocity plugin could use its methods for service, network and volume management. To ensure the Velocity plugin was only responsible for creating and handling the services, networks and volumes related to the Paper servers, I also created a CLI that exposes some of the other methods.
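The post doesn't go into the API or CLI themselves, so the following is only a guess at their general shape: a hypothetical `SwarmOrchestrator` interface that both the Velocity plugin and the CLI could depend on, plus a tiny CLI that maps command-line words onto its methods. All command names and method signatures here are assumptions for illustration.

```java
// Hypothetical shape of the orchestration API shared by the CLI and the Velocity plugin.
interface SwarmOrchestrator {
    void createNetwork(String name);
    void createVolume(String name);
    void createService(String name, String image, String network);
    void scaleService(String name, int replicas);
    void removeService(String name);
}

// Minimal CLI front end: maps "<resource> <action> <name> [args...]" onto API calls.
final class OrchestratorCli {
    private final SwarmOrchestrator api;

    OrchestratorCli(SwarmOrchestrator api) { this.api = api; }

    // Returns an exit code: 0 on success, 1 for bad usage.
    int run(String... args) {
        if (args.length < 3) return usage();
        String command = args[0] + " " + args[1];
        String name = args[2];
        switch (command) {
            case "network create" -> api.createNetwork(name);
            case "volume create" -> api.createVolume(name);
            case "service create" -> {
                if (args.length != 5) return usage(); // expects <image> <network>
                api.createService(name, args[3], args[4]);
            }
            case "service scale" -> {
                if (args.length != 4) return usage(); // expects <replicas>
                try {
                    api.scaleService(name, Integer.parseInt(args[3]));
                } catch (NumberFormatException e) {
                    return usage();
                }
            }
            case "service remove" -> api.removeService(name);
            default -> {
                return usage();
            }
        }
        return 0;
    }

    private int usage() {
        System.err.println("usage: <network|volume|service> <action> <name> [args...]");
        return 1;
    }
}
```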

This new application now lets me use the CLI to create the services, network and volumes for the proxy, MySQL and Redis. Then, from within the proxy service, the Velocity plugin can call the application's API to create a service running a Paper server and scale its replicas up and down as necessary. The swarm is now being used the way it was intended, and this entire Docker system gives finer control over the Minecraft servers and everything else they need.
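Since scaling is triggered manually, the proxy command mainly needs to turn "up" or "down" by some amount into a new replica count. Here is a minimal sketch of that calculation; the command syntax and the `MIN_REPLICAS`/`MAX_REPLICAS` bounds are hypothetical values, and the result would then be handed to the orchestration API to update the Paper service's replica count.

```java
// Hypothetical helper behind a manual "scale up|down <amount>" proxy command.
final class ReplicaScaling {
    static final int MIN_REPLICAS = 1;  // assumed floor so islands always have a server to load on
    static final int MAX_REPLICAS = 20; // assumed ceiling to cap hosting costs

    // Computes the new replica count, clamped to [MIN_REPLICAS, MAX_REPLICAS].
    static int target(int current, String direction, int amount) {
        if (amount <= 0) {
            throw new IllegalArgumentException("amount must be positive");
        }
        // long arithmetic avoids int overflow for very large amounts
        long requested = switch (direction) {
            case "up" -> (long) current + amount;
            case "down" -> (long) current - amount;
            default -> throw new IllegalArgumentException("direction must be 'up' or 'down'");
        };
        return (int) Math.max(MIN_REPLICAS, Math.min(MAX_REPLICAS, requested));
    }
}
```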

What did I learn from this?

A lot, to put it simply!

The biggest takeaway I've had from this is that no matter how well you believe you've designed a system, you must also account for the technology you plan to build it on, whether that's what the developers you work with will use or what you'll be working with personally. I had accounted for how Monster Tycoon's infrastructure should be built, but not for what it would be built on, so when it came to that, the time I spent getting this system working could've been more than halved had I properly planned out the tech behind it!

Regardless of the ups and downs, I'm now much more familiar with the technical, backend side of game development, and I also learnt a few very important lessons about systems design.

This was a very long blog post, so thank you so much for reading, and I hope you're looking forward to the next progress update in two weeks' time! 😄